Anomaly Detection for SREs and DevOps

I’m going to cover anomaly detection by answering the question, “What are the anomaly detection concepts an SRE and DevOps engineer should know in order to help them ensure more uptime and perform root cause analysis more efficiently?”

What is anomaly detection?

Anomaly detection, sometimes referred to as “outlier detection,” is the process by which machines attempt to identify outliers that deviate from a “normal” or expected pattern of behavior.

Labeled vs unlabeled data, what’s the difference?

Before we get into the different types of anomaly detection, it helps to make sure we understand the difference between labeled and unlabeled data. Why? Because data is what trains machines to detect and make judgements about what constitutes an anomaly. Here’s a simple example to illustrate the difference:

An unlabeled inventory of assets might just tell us if a system is a “physical server”, “VM”, or “container.” A labeled data set would include more useful information like “location,” “OS,” “CPU,” “RAM,” “package version,” “build number,” etc. From a machine learning perspective, the more labels or tags a piece of data has, the more likely it will be able to produce an accurate insight about unlabeled data it is asked to evaluate. Put another way, the denser and relevant the data set, the better the training and resulting correlations.

What are the different types of anomaly detection?

Generally speaking there are three types of anomaly detection methods worth understanding…

Unsupervised anomaly detection

In this method of anomaly detection you are dealing with unlabeled data. You are basically asking the machine to decide what doesn’t look “normal” by finding patterns or clusters within the data. For example: We might give the machine a dataset of half a million points that measured the CPU utilization of a system every minute for a year. The machine will point out which data points might be outliers and which look “normal,” but it’ll be up to a human to make the final judgement as to whether or not they are actually outliers. For example, maybe high CPU utilization was expected at certain times because of seasonal load and that particular pattern should be classified as “normal.”

At SignifAI, we are combining different approaches. First, we are using multiple outlier algorithms which helps us detect a variety of anomaly conditions. DBSCAN, Hampel Filter, Holt-Winters and ARIMA (X, SA) to name a few. Those are being used mostly on metric data, but also help us in smooth hard streams before pushing them into the next anomaly tier. Other types of methods SignifAI uses are mostly based on Supported Vectors Machines (or SVM) type algorithms, as well as Bayesian and Probabilistic modeling.

Bayesian modeling is a way of calculating the likelihood of some observation, given the data you’ve already seen. It allows you to declare your statistical beliefs about what your data should look like before you look at the data. These beliefs are known as ‘priors’. You can declare one for each of the parameters you’re interested in estimating. Bayesian modeling looks at these priors and the observed data, and calculates a distribution for each of those parameters. These are known as ‘posterior distributions’. Part of the benefit of probabilistic programming, relative to comparable Bayesian modeling in the past, is that you don’t need to know anything about how that calculation happens. There are many advantages in working with these models but for us the most important one is that we get a way to encode the expert knowledge about the data into the model and provide context. This is very important for an SRE because as an expert you can know that there is no chance at all of certain observations. The other statistical advantage is that there are no limitations on which distributions can be used to model the use case.

Supervised anomaly detection

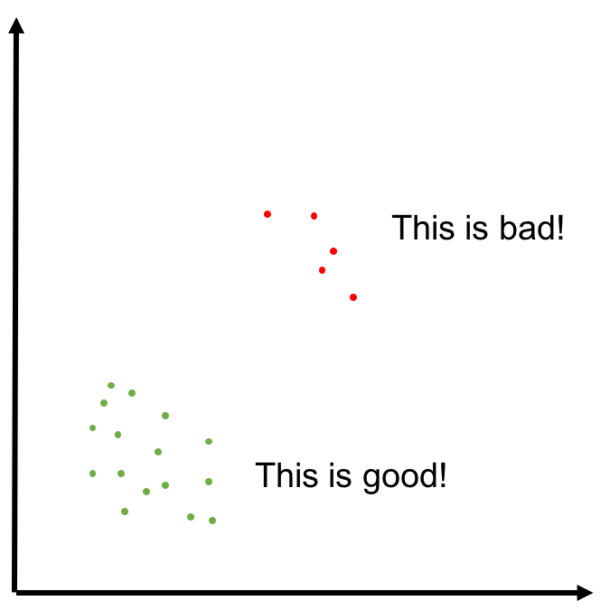

In this type of anomaly detection you train the machine to spot anomalies by feeding it two sets of data. The first set of data tells the machine what sort of behavior is “good.” The second data set tells the machine what sort of behavior should be considered “bad.” If we revisit the previous example, with supervised anomaly detection, the machine has clear instructions on how determine what an outlier is. For example, the labeled data set might include the acceptable utilization percentages at any given minute during the year to account for seasonal load.

At SignifAI, we wanted our machine intelligence platform to be able and work right out-of-the-box with minimal training and user interaction. The reason for this was that although the benefits are huge, the typical on-call SRE is going to lack AI expertise and not have the time to create large training datasets to train the monitoring system.

When an expert SRE on-call detects an anomaly, the last thing they want to be dealing with is drilling into graphs and/or events and label them for future training data. The alternative would be to review and label very large, historical datasets. However, this also requires a lot of effort and time. Because of that – at SignifAI have chosen not to use supervised learning as a technique for real-time technical operations use cases. Where we have found it very relevant, is in allowing the user to provide feedback to correct an automatic detection after-the-fact. Based on that user feedback, SignifAI adapts (or more specifically “trains”) on how it can more accurately handle future detections.

Semi-supervised anomaly detection

In this final method for detecting anomalies, a model of what should be considered “normal” is generated from a dataset and then evaluated against another dataset to see what the likely outliers would be. It’s considered “semi-supervised” because the dataset that is used to supervise the comparison can be thought of as an assumption of what might constitute as “bad,” but still requires some degree of human validation.

How is anomaly detection useful to DevOps and SREs?

Monitoring “unknown unknowns”

Anomaly detection is great at uncovering unknown issues lurking within your systems that might threaten system availability. It is often the case that there is plenty of monitoring data being collected, but it just isn’t being analyzed effectively. And more importantly, correlated against other data sets which aren’t compatible to detect “abnormal” behavior.

Alert noise

Sometimes referred to as “alert fatigue,” alert noise is something almost all organizations deal with when they employ a variety of tools to monitor thousands of metrics and events. Ask any SRE who has ever been on call to tell you how frustrating it is to deal with alerts that weren’t worth acknowledging (false positives) and critical alerts that got overlooked (true positives) because they got lost in the “noise.” Anomaly detection, when applied to alerts can help aggregate them into “incidents” or higher order alerts so you end up with less alert volume. And those that you do get, will likely be alerts you need to react to.

Detecting anomalies in logs

If you are using tools like Splunk, Elastic or SumoLogic to manage and monitor your logs, anomaly detection can be useful in helping reduce log lines into higher order categories or groups to enable the recognition of any patterns that might signal problems. Training data sets can involve a historical scanning of previous logs, as well as in a supervised fashion, by labeling specific log lines as outliers or predictors of “bad things to come.”

Detecting anomalies in metrics

Applying anomaly detection to time-series data is a classic use case. A few APM and infrastructure monitoring vendors like Datadog and New Relic have recently introduced this feature into their services. Depending on the metric and use case, teams may want to evaluate if a datapoint is an anomaly based on:

Recency: Is this an anomaly compared to the last 5 minutes of data I have?

Historically: Is this an anomaly compared to the data I have collected since the system first came online?

Seasonality: Is this normal for a specific time of the day or year? (For example: Black Friday.)

Correlated: Is this anomaly conditionally ok because of the value of another metric or variable? (For example: A build process running on the machine.)

Anomaly detection as it applies to metrics is definitely not a one size fits all proposition and teams should choose their algorithms accordingly.

Detecting anomalies across data types

So far we have covered different solutions that support some degree of anomaly detection. However, a more interesting question to ask now is:

Is it possible to detect an anomaly output from Splunk and combine it with an anomaly output from Datadog?

With SignifAI there are couple of ways to detect multiple anomalies and the relationships between them. First, it is important to remember that the SignifAI platform is completely vendor and tool agnostic. This means SignifAI can take the anomalies detected in your log, metrics or events monitoring tools and correlate between the multiple anomalies just like another stream of data. What if you want to determine causation? What can be said about one anomaly affecting another anomaly? Or even better, determining if it is the root-cause of the other anomaly? Well, because SignifAI is using Bayesian structural time-series models, it is possible to try and predict the counterfactual, i.e., how one specific anomaly stream would have evolved after the other anomaly stream if the second anomaly stream had never occurred.

Should you build your own anomaly detection system or use your monitoring tools?

Despite the mathematics having been around for a long time, it was only until recently that APM, log and infrastructure monitoring vendors began to incorporate anomaly detection functionality into their tools. The majority of the implementations so far have been rather basic. The other limitation of course is that they are siloed. They can only detect anomalies within the data sets they can consume, for example logs or metrics. If we recall, the more relevant training data a machine has at its disposal, the more powerful the correlations and the greater the accuracy of anomalies it can detect.

Aside from these tools limiting their anomaly detection to their respective data domains, you should also consider the development and maintenance costs associated with building your own system. In-house expertise is also required to develop a robust anomaly detection system that can yield accurate and actionable results. It’s often better to buy a subscription to a service if you don’t have the in-house expertise, budget or highly specialized use case that merits going at it alone.