With Clarity Comes Focus: How to Reduce Your SRE Team’s Cognitive Load

With the success of the Google SRE book, more and more teams are moving from a traditional sysadmin/“ops team” model for managing production incidents toward the SRE ideal: an empowered, engineering-focused team that spends at least 50% of its time on creative work that moves the organization toward more automated, sustainable infrastructure. But cutting in half the time your team spends fire-fighting and doing other traditional “ops” tasks isn’t easy. It requires a bold long-term vision, strategic planning, and the right tools.

With this challenge in mind, a natural first step for SRE leaders is to look for data. How rough is the situation right now? How do their teams measure up on common SRE KPIs like mean time to understand (MTTU) and mean time to respond (MTTR) to incidents? How often are team members woken up at night by an ops problem?

Luckily, this information is probably already recorded in teams’ incident management platforms, but busy SRE teams don’t have much time to comb through it. This is why many AIOps and incident management tools have introduced elaborate analytics features, happily churning out histograms and pie charts for curious team members to peruse.

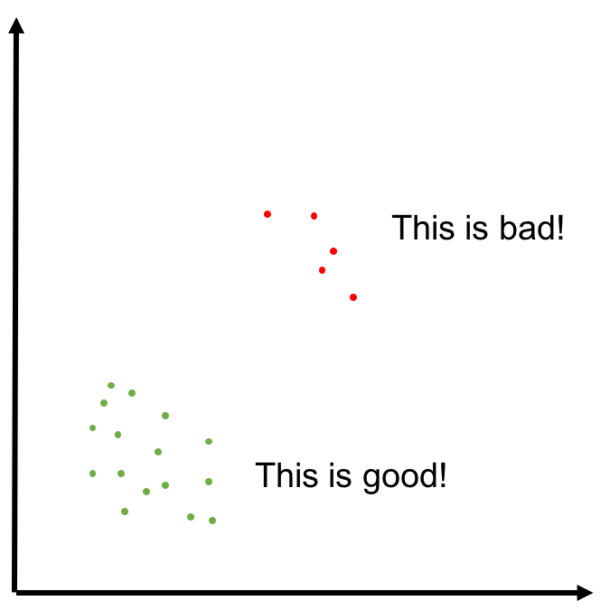

Although data visualization is important, in production systems with lots of noisy alerts it may not be as helpful as we’d hope, for a few reasons.

Underlying issues can hide in “good” data

Let’s use mean time to acknowledge (MTTA) as an example. A decrease in MTTA, which is easy to compute from the per-incident statistics in your platform, could look like improvement for an SRE team. But maybe the drop happened because some of your systems started producing flapping alerts, and the team now acks them as soon as they’re paged: the SRE equivalent of subconsciously hitting the snooze button or mass-deleting emails. To find out what is really going on, you’ll have to dig deeper into the data or talk to a team member.
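To make the MTTA trap concrete, here is a minimal Python sketch. The `Page` record, its field names, and the `suspected_flappers` thresholds are hypothetical choices for illustration, not fields from any particular incident platform. It shows how a burst of quickly acknowledged flapping pages can drag the mean down even though the real incidents were handled no faster, and one crude way to surface the alert keys responsible.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass
class Page:
    alert_key: str       # hypothetical: ID of the alert rule that paged
    triggered_at: float  # seconds since epoch when the page fired
    acked_at: float      # seconds since epoch when someone acknowledged it

    @property
    def time_to_ack(self) -> float:
        return self.acked_at - self.triggered_at


def mtta(pages):
    """Mean time to acknowledge, in seconds, across the given pages."""
    return mean(p.time_to_ack for p in pages)


def suspected_flappers(pages, min_pages=5, max_ack_seconds=30):
    """Alert keys that page often and are acknowledged almost instantly.

    Both thresholds are illustrative assumptions, not recommended values.
    """
    by_key = defaultdict(list)
    for p in pages:
        by_key[p.alert_key].append(p)
    return sorted(
        key
        for key, group in by_key.items()
        if len(group) >= min_pages and mtta(group) <= max_ack_seconds
    )


# Last month: four real incidents, each acknowledged after 5 minutes.
real = [Page("db-latency", t, t + 300) for t in range(0, 4000, 1000)]
# This month: the same four, plus a flapping alert that paged 12 times
# and was acknowledged within 10 seconds every time.
flapping = [Page("disk-space-flap", t, t + 10) for t in range(5000, 5120, 10)]

print(mtta(real))                           # 300
print(mtta(real + flapping))                # 82.5
print(suspected_flappers(real + flapping))  # ['disk-space-flap']
```

Breaking the average down by alert key is just one way to look behind a single number. It still can’t tell you why those pages were acknowledged so quickly, which is where talking to the on-call engineer comes in.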

It’s hard to maintain a single source of truth

Most modern production systems include a bevy of tools, each with its own definition of an “incident” or “alert,” which makes analytics that combine data points from multiple sources tricky to interpret. For example, take a simple metric: the number of incidents a team sees per week. Does this include:

Every alert that triggers in a monitoring system?

Every incident a team member is paged for?

Repeats of the same alert/incident?

Alerts that resurface after a snooze period?

Multiple incidents that occur at the same time for one underlying issue?

Only incidents that actually require human action?

Chances are, analytics for the “number of incidents” are going to mean different things in each tool, and any one of those numbers may or may not be the most meaningful choice for a team.
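The ambiguity is easy to demonstrate. The Python sketch below counts one hypothetical week of alerts under four of the definitions above. The `Alert` fields, the sample data, and the `repeat_window` and `burst_window` thresholds are all assumptions made for illustration, and grouping alerts purely by arrival time is a deliberately crude stand-in for real correlation logic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    source: str       # hypothetical: the tool that emitted the alert
    key: str          # hypothetical: the alert rule or check identifier
    ts: int           # seconds since the start of the week
    actionable: bool  # did a human actually need to do something?


def count_raw(alerts):
    """Every alert that fired, in every tool."""
    return len(alerts)


def count_deduplicated(alerts, repeat_window=3600):
    """Ignore re-fires of the same key within repeat_window seconds of its last firing."""
    last_seen = {}
    count = 0
    for a in sorted(alerts, key=lambda a: a.ts):
        prev = last_seen.get(a.key)
        if prev is None or a.ts - prev > repeat_window:
            count += 1
        last_seen[a.key] = a.ts
    return count


def count_correlated(alerts, burst_window=300):
    """Treat alerts arriving within burst_window seconds of the previous one as one issue."""
    count = 0
    prev_ts = None
    for a in sorted(alerts, key=lambda a: a.ts):
        if prev_ts is None or a.ts - prev_ts > burst_window:
            count += 1
        prev_ts = a.ts
    return count


def count_actionable(alerts):
    """Only alerts that required human action."""
    return sum(1 for a in alerts if a.actionable)


week = [
    # One database outage, seen by three tools within two minutes.
    Alert("metrics", "db-down", 1000, True),
    Alert("synthetics", "api-5xx", 1060, False),
    Alert("cloud", "queue-backlog", 1120, False),
    # A noisy disk check: a quick repeat, then it resurfaces after a snooze.
    Alert("metrics", "disk-80pct", 20000, False),
    Alert("metrics", "disk-80pct", 20100, False),
    Alert("metrics", "disk-80pct", 30000, False),
    # An unrelated certificate expiry that someone had to fix.
    Alert("cloud", "cert-expiry", 90000, True),
]

for name, counter in [
    ("raw", count_raw),
    ("deduplicated", count_deduplicated),
    ("correlated", count_correlated),
    ("actionable", count_actionable),
]:
    print(name, counter(week))
# raw 7
# deduplicated 6
# correlated 4
# actionable 2
```

The same seven alerts produce four different weekly totals, from 7 down to 2, depending only on the counting rule. That is why it pays to agree on a definition before comparing numbers across tools.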

Your team has the most valuable perspective

One of the foundational principles of Kaizen, the Japanese philosophy of continuous improvement, is that team members at all levels should be involved in evolving the process. Even with the fanciest analytics platform in the world, the people doing the day-to-day work have the greatest insight into where the process snags and where they struggle. Using incident analytics to establish a baseline is a great idea, but the more nuanced steps toward SRE greatness don’t require fancier graphs; they just require talking to the team.

A clearer picture

Built-in tools for visualizing your data can be useful, but in a noisy production environment, an overload of graphs and charts can actually make life harder. The root problem with an analytics-first approach to SRE process improvement is that most production systems still generate lots of noisy alerts, and any chart built on top of that noise inherits it.

Instead of looking for new ways to visualize the noise, what if there was a way to create greater clarity at the alert level first?

Through layers of machine learning-driven filters and correlation logic, SignifAI helps consolidate and streamline incidents across all of your platforms so your team only gets paged for what matters.

With this clarity and context, you’ll be able to answer questions about your team’s progress and production environment health much more quickly, and spend less time pushing through the noise.